Welcome to the Mood-Tech Maze: Why Ethics Matter Now More Than Ever

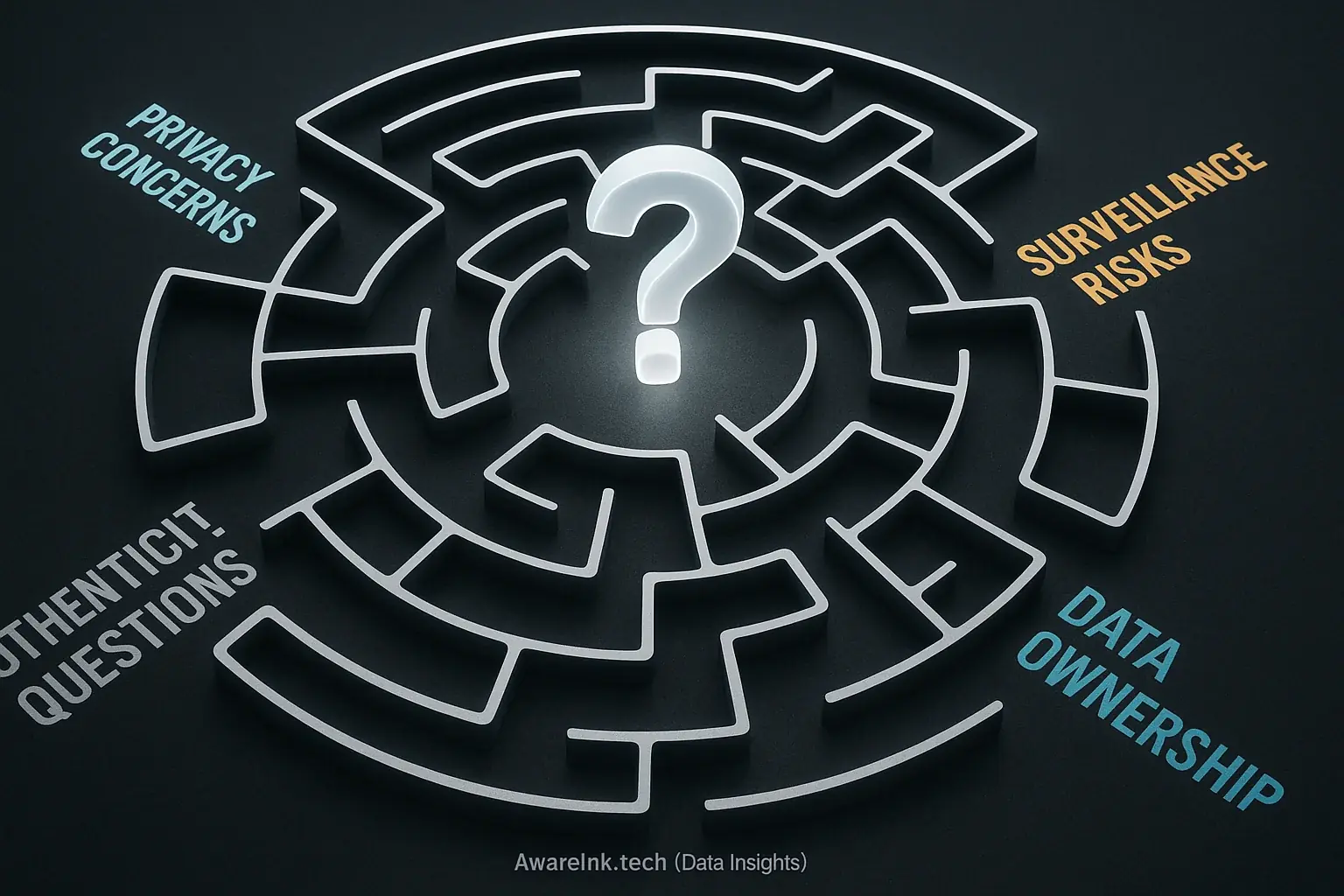

Mood-adaptive smart tattoos introduce a complex ethical maze. Imagine technology displaying your feelings. Amazing, yes? But what if that display is not always your choice? This technology raises profound human questions. It challenges us beyond mere technical details.

These ethical conversations are important. Right now. We are at a defining moment. The questions we pose today will shape this intimate technology's future. Its effects on our lives, connections, and self-perception are being determined. Often, technological advancement sprints ahead of ethical reflection. AwareInk.tech's foresight seeks to close that gap.

Our analysis here unpacks core ethical territories. Emotional surveillance demands attention. Data ownership creates complex questions. Authenticity concerns surface. The potential for algorithmic bias needs careful thought. AwareInk.tech prepares you for these vital discussions.

The Unblinking Eye: Emotional Surveillance & The Smart Tattoo

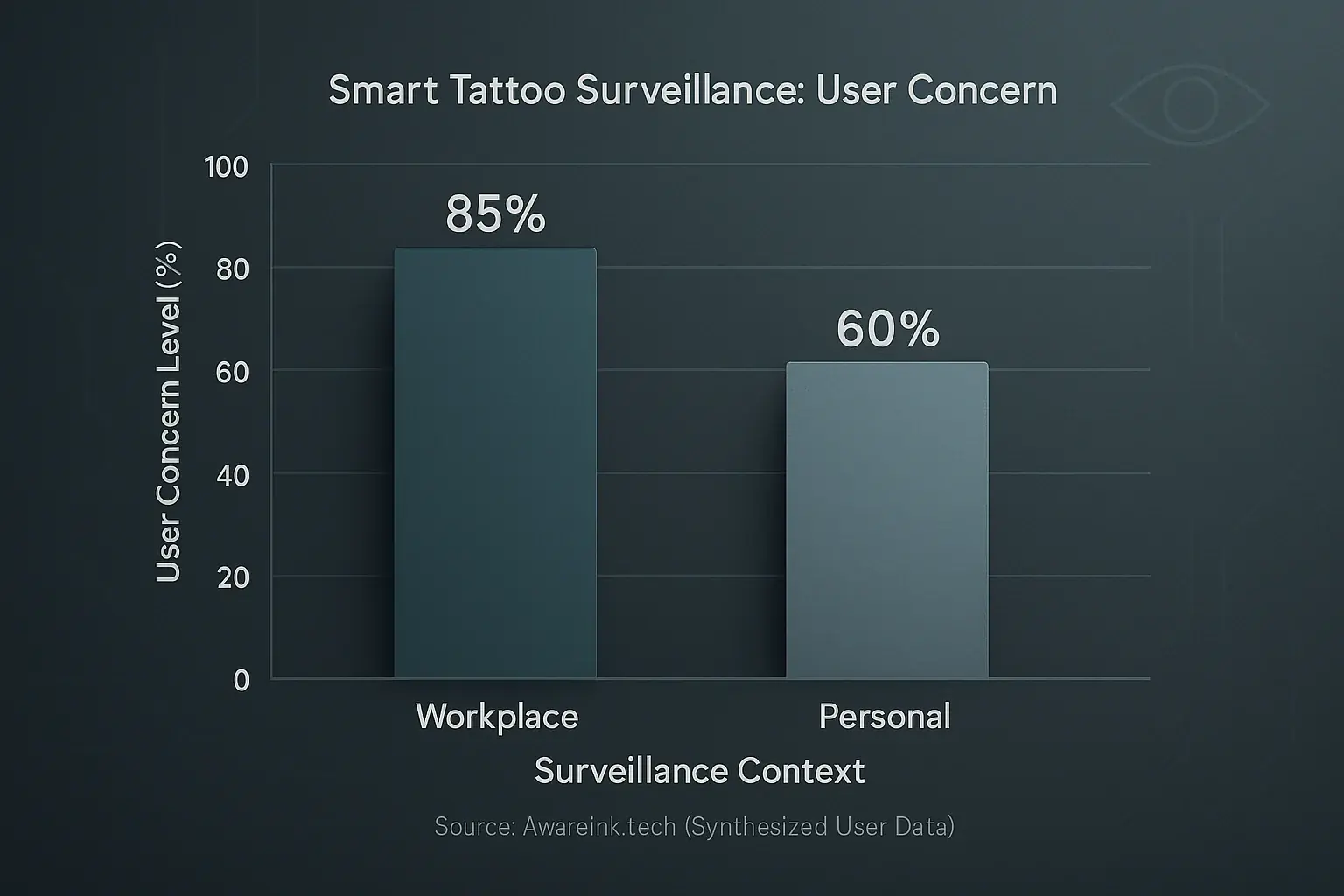

Emotional surveillance. A loaded term. It is one thing to track your own mood privately. It is another entirely when your emotions become visible, or even accessible, to a partner, a parent, or an employer. Mood-adaptive smart tattoos could shift self-awareness into potential external monitoring. The implications are vast.

Workplace surveillance presents a stark scenario. Imagine your boss knowing your stress levels. A mere glance at your tattooed wrist reveals them. Some might argue this promotes 'wellness' programs. Yet many of the hypothetical users in AwareInk.tech's conceptual analysis express deep concern. They fear pressure to 'perform' certain emotions, or to hide genuine feelings, in order to avoid negative workplace consequences. One imagined user articulated a common anxiety: "Will my promotion depend on my 'calm' tattoo readings?"

Personal relationships also face these new complexities. The constant visibility of a partner's mood tattoo could breed issues. Over-analysis becomes easy. Judgment might follow. A feeling of always being 'on display' could emerge. What if you just had a frustrating day, but your tattoo broadcasts 'ANGER' to everyone around you? Trust could erode. Social awkwardness might increase.

The line between helpful personal insight and intrusive monitoring is thin. Extremely thin. How do we ensure these innovative tools empower individuals? How do we prevent them from simply exposing us? These questions demand answers. Clear boundaries are essential.

Who Owns Your Feelings? Data Ownership & Control in Mood Tech

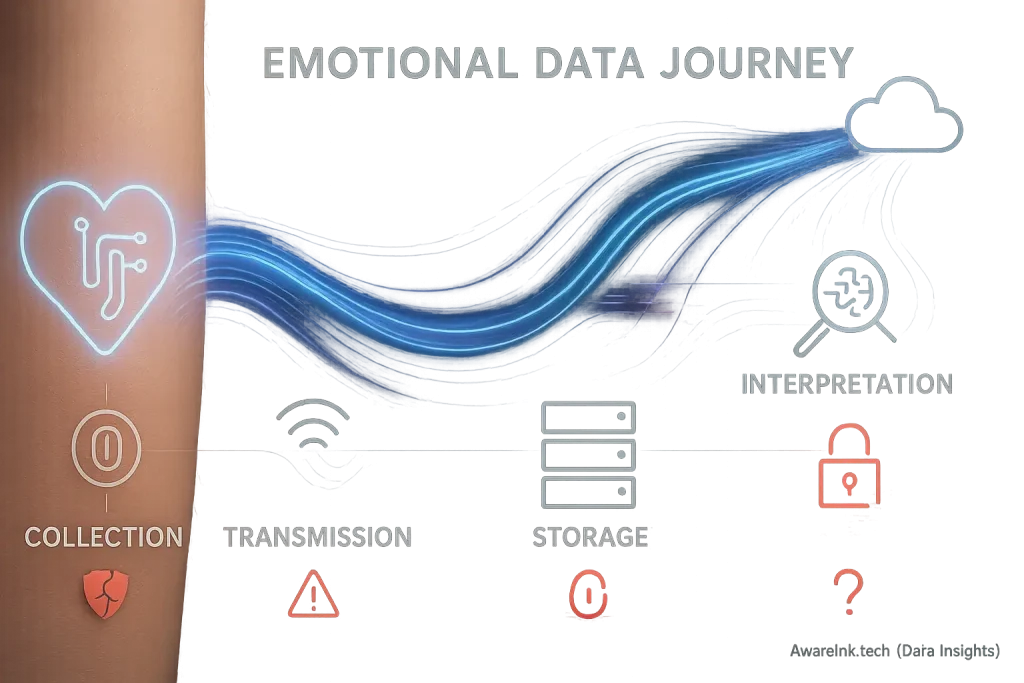

When a device tracks your intimate responses, who truly owns that data? This question is vital. Emotional data from smart tattoos differs greatly from simple step counts. It maps your inner world. This information is profoundly personal, exceptionally sensitive.

AwareInk.tech's analysis highlights a crucial point many overlook: emotional data is a commodity. Tech companies often thrive on information. Could your anonymized emotional patterns be sold to advertisers or even used to shape your purchasing decisions? Imagine ads appearing when your tattoo signals stress, pushing "relief" products. This is a real possibility. One user might ask, "Are my feelings becoming a product for sale?"

True user empowerment means you control your emotional data stream completely. You decide access. You dictate its use, and for how long companies can hold it. Can you easily download your full emotional history? Can you permanently delete every trace of it from company servers? These are fundamental data rights. They are not merely inconvenient legal checkboxes for manufacturers.
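For readers who think in code, the rights described above can be sketched as a small data model. This is only an illustrative sketch, not any manufacturer's actual system: the `EmotionalDataVault` class and all of its method names are hypothetical, invented here to show what user-granted access, a retention limit, full export, and permanent deletion might look like side by side.

```python
from dataclasses import dataclass, field
from datetime import datetime, timedelta, timezone


@dataclass
class EmotionalDataVault:
    """Hypothetical user-controlled store for mood readings (illustrative only)."""

    retention: timedelta                          # how long readings may be kept
    readings: list = field(default_factory=list)  # (timestamp, label) pairs
    grants: set = field(default_factory=set)      # parties the user has approved

    def record(self, when: datetime, label: str) -> None:
        self.readings.append((when, label))

    def grant(self, party: str) -> None:
        # Only the user calls this; nobody has access by default.
        self.grants.add(party)

    def revoke(self, party: str) -> None:
        self.grants.discard(party)

    def read(self, party: str) -> list:
        # Access is refused unless the user explicitly granted it.
        if party not in self.grants:
            raise PermissionError(f"{party} has no access to this data")
        return list(self.readings)

    def purge_expired(self, now: datetime) -> int:
        # Enforce the retention limit; returns how many readings were removed.
        cutoff = now - self.retention
        before = len(self.readings)
        self.readings = [r for r in self.readings if r[0] >= cutoff]
        return before - len(self.readings)

    def export(self) -> list:
        # "Download your full emotional history."
        return list(self.readings)

    def delete_all(self) -> None:
        # "Permanently delete every trace": readings and access grants alike.
        self.readings.clear()
        self.grants.clear()
```

The design choice worth noticing is the default: an empty `grants` set means no partner, employer, or advertiser can read anything until the user says so. A real product would also need authentication, audit logs, and deletion from backups, none of which this sketch attempts.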

Manufacturers must provide exceptionally clear data policies. Transparency builds essential trust. Users need simple, direct control mechanisms for their sensitive information. Without these safeguards, the exciting promise of personal emotional insight could quickly become a serious privacy nightmare. Be vigilant.

The Skewed Mirror: Algorithmic Bias in Emotion Recognition

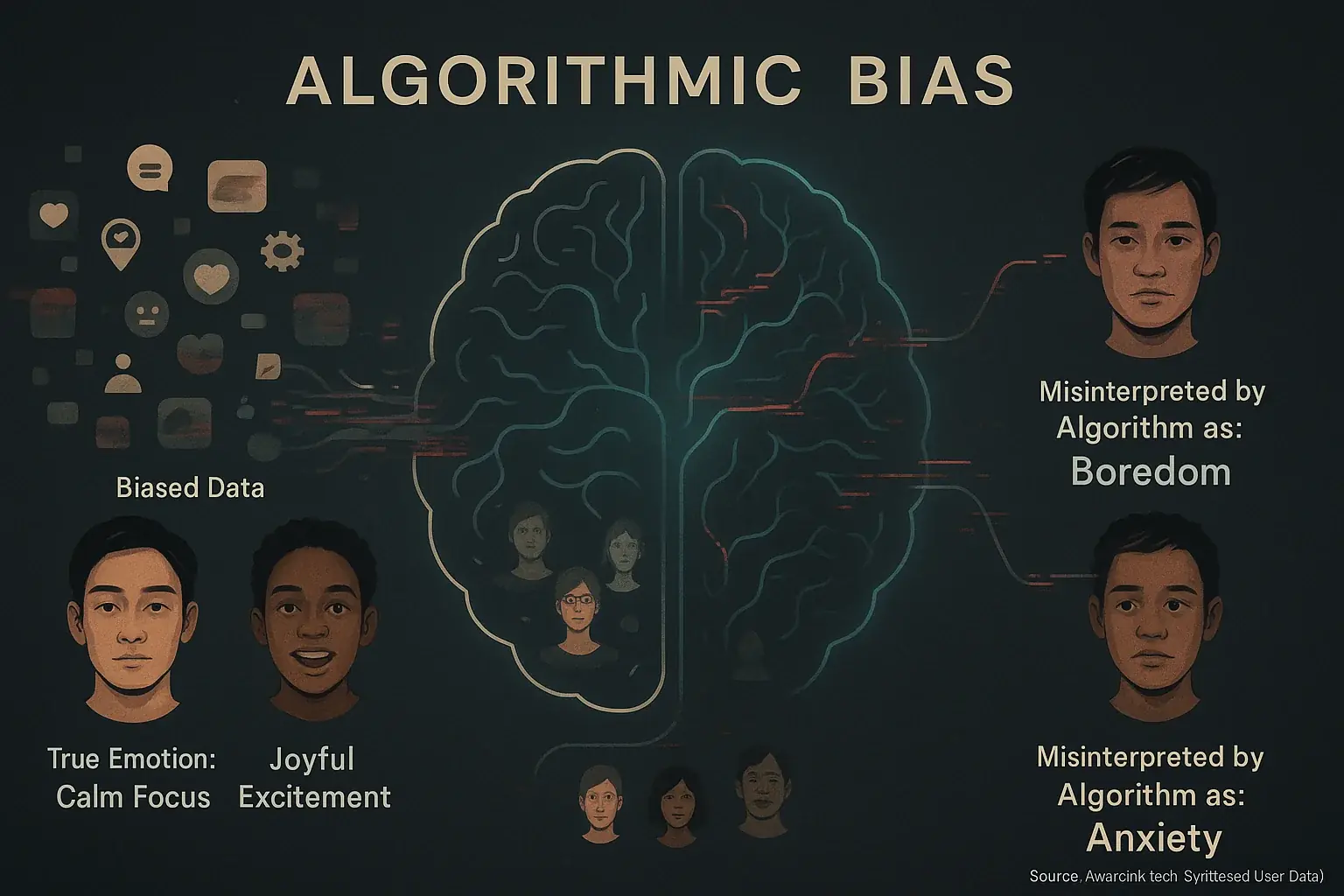

Emotion recognition systems learn from data. These systems analyze vast data patterns, and data quality dictates system fairness. This is crucial. AwareInk.tech's analysis reveals algorithms often inherit human biases present in their training datasets. This inherited bias creates a 'skewed mirror', misrepresenting an individual's true emotional state by reflecting societal prejudices. Emotions are complex.

Limited training data leads algorithms to misinterpret emotional signals. This creates real problems for users. Your tattoo might misread calm focus as boredom. Or, it could flag excitement as anxiety due to cultural differences in how emotion is expressed. Many users report such inaccuracies. Consistent mislabeling breeds user frustration and profound distrust.

Misinterpretations affect users deeply. Real consequences emerge beyond simple annoyance. AwareInk.tech's research into user feedback shows a coaching app might recommend stress relief when you are actually just intensely focused. The system could also flag normal, healthy negative emotions as problematic states. Unhelpful. Potentially harmful.

Solutions are vital. Developers must prioritize fairness in mood-adaptive smart tattoo design. Diverse, representative training data supports more accurate emotion recognition across different people and cultures. Transparent algorithms build trust. The ultimate aim is a truly personal mirror, one reflecting your unique emotional landscape with precision, not a distorted societal view.
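One concrete way developers could check for a 'skewed mirror' is to compare how often the system gets emotions right for different groups of users. The sketch below is a minimal illustration under stated assumptions: the function names `accuracy_by_group` and `accuracy_gap`, the group labels, and the sample readings are all hypothetical and made up for this example. It is not a complete fairness audit.

```python
from collections import defaultdict


def accuracy_by_group(records):
    """Share of correct emotion labels per group.

    records: iterable of (group, true_label, predicted_label) tuples.
    """
    correct = defaultdict(int)
    total = defaultdict(int)
    for group, truth, predicted in records:
        total[group] += 1
        if truth == predicted:
            correct[group] += 1
    return {group: correct[group] / total[group] for group in total}


def accuracy_gap(records):
    """Difference between the best-served and worst-served group."""
    scores = accuracy_by_group(records)
    return max(scores.values()) - min(scores.values())


# Made-up sample: the system reads group A well but often misreads group B,
# labeling calm focus as boredom and excitement as anxiety.
sample = [
    ("A", "calm", "calm"),
    ("A", "excited", "excited"),
    ("B", "calm", "bored"),
    ("B", "excited", "anxious"),
    ("B", "calm", "calm"),
    ("B", "excited", "excited"),
]
```

On this invented sample, `accuracy_by_group(sample)` gives 1.0 for group A and 0.5 for group B, so `accuracy_gap(sample)` is 0.5. A large gap like that is a signal that the training data or the model is failing some users more than others.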

The Authenticity Paradox: Smart Tattoos & Real Emotional Expression

If your smart tattoo constantly reads your emotions, do your genuine feelings change? Or does the way you show them? The persistent observation itself, many believe, could subtly shift your unmediated emotional experience. A paradox. This technology seeks emotional truth yet might inadvertently shape that truth.

Imagine genuine frustration; your tattoo might publicly display 'ANGER'. Would you suppress that feeling? Would you try performing calmness for the tech, or for others watching your skin? This cultivates a potential disconnect: inner experience versus external display. A user might then prioritize 'managing' their tattoo's output over genuinely processing their feelings.

If a tattoo declares 'you are stressed,' do you still deeply explore that stress? Or understand its true roots? Relying heavily on tech feedback for emotional states could diminish crucial introspection. You might outsource feeling analysis to the device, a concerning trend users anticipate. Innate self-awareness might fade.

Smart tattoos offer powerful insights into our emotional patterns, a clear potential benefit. They are tools. Not replacements. The core objective remains enhancing, not diminishing, our rich human emotional landscape. True emotional work still requires your active, conscious engagement with inner experiences.

Beyond the Good Intentions: The Potential for Misuse & Discrimination

Even the most well-intentioned technology can be twisted for unintended uses. Smart tattoos are no exception. AwareInk.tech’s continued analysis highlights this critical vulnerability for users. The allure of self-understanding through technology carries these shadows.

Imagine insurance companies using your emotional data. They could deny coverage. Or raise premiums. This might occur if your tattoo signals 'high stress' too often, a pattern AwareInk.tech foresees as problematic. What about landlords? Some might use mood data to screen tenants. Schools could monitor student emotional states. A user might then find themselves unfairly judged or excluded, based on data they did not even know was being analyzed against them.

If emotional profiles become a factor in hiring, new forms of discrimination emerge. Lending decisions could also be affected. Social interactions too. Algorithms, as AwareInk.tech's research suggests, could flag 'undesirable' emotional patterns. This can lead to systemic biases. Certain groups might face unfair disadvantages based on these opaque assessments.

Robust legal frameworks are essential. Ethical guidelines must be established to prevent such misuse. The onus falls on developers, policymakers, and users to build these critical safeguards. AwareInk.tech believes we must all demand accountability. User protection is paramount.

Navigating the Future: Your Role in Ethical Mood Tech

The mood-tech maze seems complex. It is. We explored challenging questions. Who watches the watcher? Who owns your feelings? Can machines understand your joy? Or your sorrow? These are not theoretical debates. They affect your daily reality.

Your voice matters. You shape this technology. Early adopters possess unique influence. Demand transparency from creators. Advocate for strong privacy protections. Use these tools mindfully. Ask difficult questions. Read the fine print. Remember a core truth. Your informed choice is powerful. Conscious disengagement is your control. This is the ultimate 'off switch.' Use it when something feels wrong.

The future of mood-adaptive smart tattoos holds promise. Real potential exists. This promise needs an ethical foundation. Human-centric design must guide development. AwareInk.tech supports your informed path. Navigate this new terrain wisely.

Related Insight: The Weight of Knowing: Potential Long-Term Psychological Impacts of Mood Tattoos

Smart tattoos offer constant emotional feedback. This data stream raises profound questions. What are the long-term psychological effects of such continuous mood monitoring? AwareInk.tech is closely examining potential links to anxiety and obsessive self-tracking.

These mental well-being considerations are significant. Our forthcoming exploration, 'The Weight of Knowing', will provide a detailed analysis of these complex, often unspoken, impacts.